INDUSTRIAL AI: HOW DEEP LEARNING COMPLEMENTS MACHINE VISION SYSTEMS

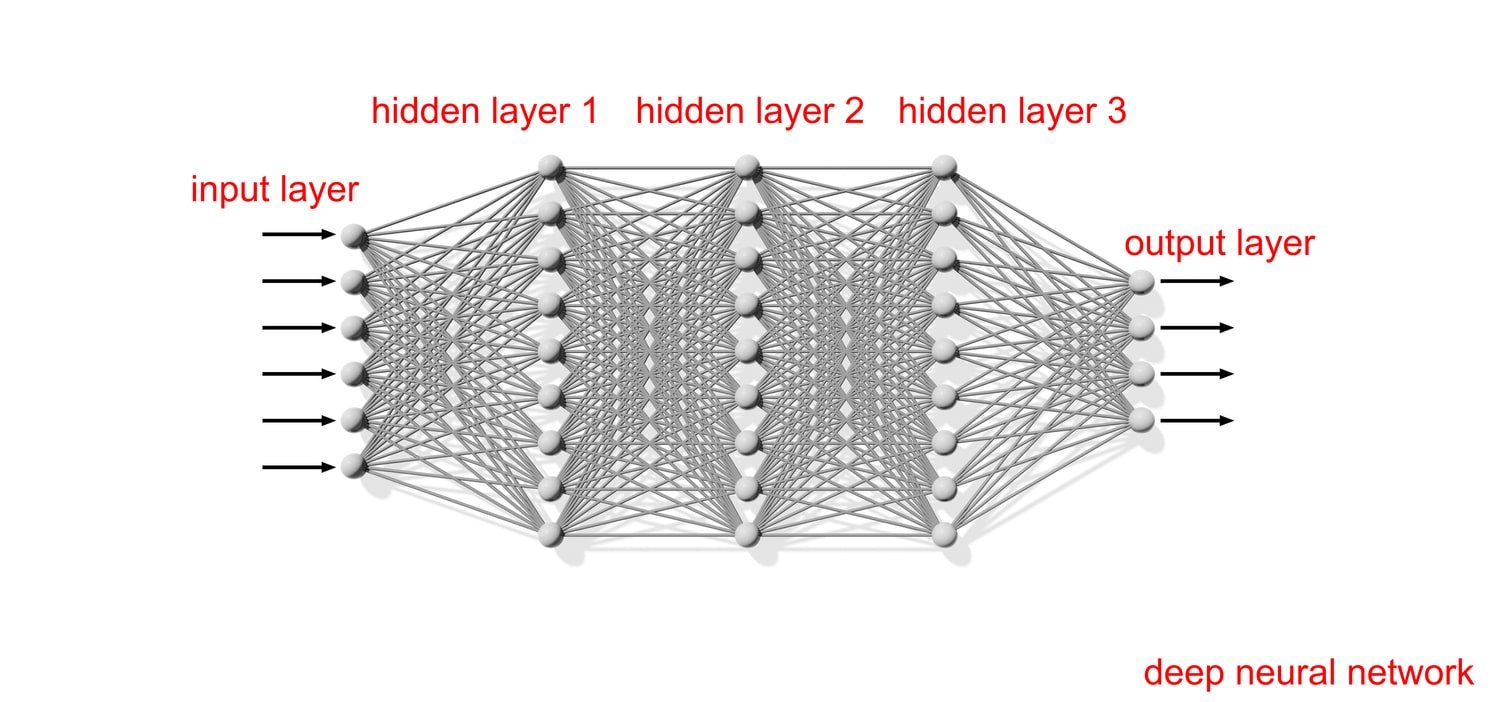

Within the realm of industrial automation, artificial intelligence (AI) has become quite the buzzword. Hype aside, AI has carved out a niche in the industrial space, offering tremendous value on the plant floor when properly designed and implemented. Deep learning — a subset of AI that uses neural networks such as convolutional neural networks (CNNs) that mimic the learning process of the human brain — has emerged as a popular industrial AI tool for its ability to aid in subjective inspection decisions and in inspecting scenes where identifying specific features proves difficult due to high variability or complexity.

When it comes to choosing a deep learning solution, manufacturers have several options: programming a solution in-house with established frameworks such as PyTorch or TensorFlow, purchasing an off-the-shelf solution, or opting for an application-specific AI-enabled system or product.

Deep learning, however, is not a standalone technology. Rather, when deployed alongside traditional machine vision techniques, it becomes a powerful tool for automated inspection that enhances the overall capabilities of industrial automation systems deployed in applications including electronics and semiconductor inspection, consumer packaged goods inspection and automotive inspection.


DEEP LEARNING: A TOOL FOR THE MACHINE VISION TOOLBOX

Machine vision refers to a set of hardware — including cameras, optics, and lighting — that, along with the appropriate software, helps businesses execute certain functions based on the image analysis done by the machine vision system. The four major categories of machine vision applications are inspection, identification, guidance, and gauging, and at the heart of all systems is the software, which helps drive component capability, reliability, and usability.

Traditional machine vision software refers to discrete, rules-based algorithms capable of handling a significant number of automated imaging tasks. Examples of general machine vision algorithms include edge detection, image transformation, content statistics, correlation, geometric search, and OCR/OCV. When it comes to incorporating deep learning into a machine vision system, it’s important to remember that deep learning is not suited to every machine vision application.
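To make "rules-based" concrete, here is a minimal sketch of edge detection in plain Python. The function name `horizontal_edges`, the tiny grayscale image, and the threshold are all illustrative assumptions; production systems rely on optimized vision libraries rather than nested loops.

```python
def horizontal_edges(image, threshold):
    """Return (row, col) positions where brightness jumps by more than
    `threshold` between a pixel and its right-hand neighbor."""
    edges = []
    for r, row in enumerate(image):
        for c in range(len(row) - 1):
            if abs(row[c + 1] - row[c]) > threshold:
                edges.append((r, c))
    return edges


# Hypothetical 2x5 grayscale image: dark background, bright part on the right.
image = [
    [10, 12, 11, 200, 205],
    [9, 11, 10, 198, 201],
]
print(horizontal_edges(image, threshold=50))  # [(0, 2), (1, 2)]
```

The rule is explicit and fixed: a developer picks the threshold, and the algorithm applies it the same way to every image. That works well when the feature is easy to define and poorly when it is not.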

In certain applications, of course, deep learning can fill in the gaps where traditional approaches fall short. For example, a machine vision system inspecting a weld will look for specific details in the image of the weld, but since each weld is unique, it’s difficult to define algorithmically what a good weld looks like. Deep learning, on the other hand, can identify features that are more subjective, similar to how a person would inspect a weld. A slightly amorphous weld that does not look the same as a golden image does not necessarily equate to a defective weld.

Fig 1: Modeled after the human brain, neural networks are a subset of machine learning at the heart of deep learning algorithms that allow a computer to learn to perform specific tasks based on training examples.

For deep learning to achieve any level of success, a high-quality dataset is required. Manufacturers can create an initial dataset by uploading images depicting the classes of defects or features that must be detected, along with the “good images.” A team of subject matter experts can then label the initial dataset, train the model, validate results with test images from the original dataset, test the performance in production, and retrain the model to cover any new cases, features, or defects. Once this has been done and all factors have been considered for implementing deep learning in a machine vision system, the software can begin helping with defect detection, feature classification, and assembly verification, as well as with inspecting complex or highly variable scenes where identifying specific features may prove difficult, such as bin picking with mixed parts or products.
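The "validate results with test images from the original dataset" step can be sketched as a per-class split of the labeled images. This is a minimal illustration in plain Python; the function name `stratified_split`, the file names, and the 20% hold-out fraction are assumptions, not part of any specific product workflow.

```python
import random
from collections import defaultdict


def stratified_split(samples, validation_fraction=0.2, seed=0):
    """Split (image_path, label) pairs into train and validation lists,
    holding out roughly the same fraction of every class."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, validation = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # Hold out at least one image per class so rare defects get validated.
        n_val = max(1, round(len(items) * validation_fraction))
        validation.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, validation


# Hypothetical labeled dataset: 10 "good" images and 5 "scratch" defects.
samples = [(f"good_{i}.png", "good") for i in range(10)]
samples += [(f"scratch_{i}.png", "scratch") for i in range(5)]
train, validation = stratified_split(samples)
print(len(train), len(validation))  # 12 3
```

Splitting per class matters because defect images are usually far rarer than good images; a purely random split could leave the validation set with no examples of a given defect.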

DEEP LEARNING CAMERA CONSIDERATIONS

Though perhaps a cliché, it’s true that when it comes to developing a deep learning application, it’s “garbage in, garbage out.” Like traditional machine vision approaches, deep learning applications cannot overcome poor lighting or optical design, and still require high-quality images to create a quality dataset for training and labeling. This means implementing either an optimal lighting setup to provide sufficient contrast in images or, in some cases, a specialized lighting scheme. For example, certain applications may require multiple inspections on a single part, which means different lighting setups for tasks such as presence/absence detection or checking the size or shape of an object.

Of course, while lighting is critical to success in any machine vision or deep learning application, the system revolves around the camera’s ability to capture images at the necessary speeds and resolutions. Images must be the correct resolution for the target application with the best possible feature contrast, but several other application constraints and considerations must also be factored in. These include size, weight, power, the speed of the object being imaged, streaming vs. recording, color vs. monochrome, minimum defect size, and minimum field of view.
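Minimum defect size and field of view together set a floor on sensor resolution. The sketch below works through that arithmetic; the three-pixels-per-defect figure is a rule-of-thumb assumption rather than a fixed requirement, and the field of view and defect size are hypothetical.

```python
import math


def min_pixels_across(field_of_view_um, min_defect_um, pixels_per_defect=3):
    """Smallest pixel count along one axis so that a defect of size
    `min_defect_um` spans at least `pixels_per_defect` pixels."""
    return math.ceil(field_of_view_um * pixels_per_defect / min_defect_um)


# Hypothetical application: 200 mm x 150 mm field of view, 0.2 mm minimum defect.
width_px = min_pixels_across(200_000, 200)
height_px = min_pixels_across(150_000, 200)
print(width_px, height_px, width_px * height_px / 1e6)  # 3000 2250 6.75
```

In this example the application needs roughly 6.75MP of usable resolution before lens quality, sensor aspect ratio, and alignment margins are taken into account.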

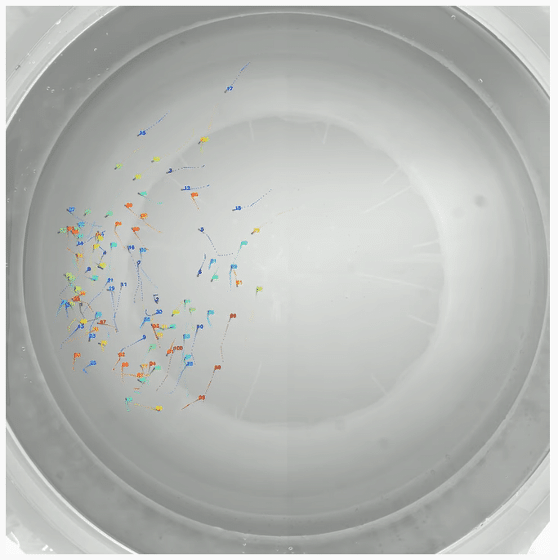

High-speed applications, where a part or subject is moving very fast and the system must freeze the motion of the object to analyze details, will require higher-speed cameras, while a system requiring a high level of detail will require a camera with a high-resolution image sensor. Emergent Vision Technologies specializes in the design and development of industrial high-speed cameras that deliver some of the highest frame rates and resolutions available in the machine vision market today.
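"Freezing the action" comes down to keeping motion blur below about one pixel during the exposure. The following sketch shows the calculation under assumed values; the function name `max_exposure_s`, the one-pixel blur budget, and the example numbers are illustrative only.

```python
def max_exposure_s(field_of_view_mm, pixels_across, speed_mm_per_s, max_blur_px=1.0):
    """Longest exposure (in seconds) that keeps motion blur within
    `max_blur_px` pixels for an object moving across the field of view."""
    mm_per_pixel = field_of_view_mm / pixels_across
    return max_blur_px * mm_per_pixel / speed_mm_per_s


# Hypothetical line: 400 mm field of view imaged across 4000 pixels,
# with parts moving at 1 m/s (1000 mm/s).
exposure = max_exposure_s(field_of_view_mm=400, pixels_across=4000, speed_mm_per_s=1000)
print(f"{exposure * 1e6:.0f} microseconds")  # 100 microseconds
```

Shorter exposures demand more light, which is one reason lighting design and camera selection have to be considered together.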

Fig 2: The idtracker.ai tool identifies and tracks the position of individual, unmarked animals regardless of the species or size of the animals. In this application, researchers used 20MP 10GigE cameras from Emergent to track a collective of 100 juvenile zebrafish with 99.9% accuracy.

Leveraging the established GigE Vision and GenICam standards, Emergent machine vision cameras can be integrated seamlessly into compliant software applications without the need for proprietary acquisition hardware or cabling. Emergent’s 10GigE, 25GigE, and 100GigE cameras offer resolutions of 100MP+ and frame rates near 3500fps for applications where large amounts of data must be generated, then quickly and reliably transmitted to a host device such as an industrial PC.

By using Ethernet as the connectivity interface, Emergent cameras deliver connectivity speeds of up to 100Gbps without sacrificing sensor resolution or speed. In addition, the cameras can be synchronized using IEEE 1588 PTP for high-speed image capture of an event at under 1µs, with the absolute minimum CPU utilization, memory bandwidth, latency, and jitter, at cable lengths of up to 10km.

INFERENCE

The video below shows how easily you can add and test your own trained inference model to detect and classify arbitrary objects. Simply train your model with PyTorch or TensorFlow and add it to your own eCapture Pro plug-in. Then instantiate the plug-in, connect to your desired camera, and click run. It does not get easier than this.

With well-trained models, inference applications can be developed and deployed with many Emergent cameras on a single PC with a couple of GPUs using Emergent’s GPUDirect functionality. Nobody does performance applications like Emergent.

CUSTOM PLUG-IN DEVELOPMENT

This short clip illustrates how customers can create their own custom plug-ins, which can then be loaded into eCapture Pro to generate real-time results. Any plug-in can be fed by one or multiple cameras to test total system throughput and work toward maximum system density.

GPUDIRECT: ZERO-DATA-LOSS IMAGING

Many applications today rely on NVIDIA GPUs to handle the compute requirements of deep learning tasks. Emergent’s machine vision cameras are also compatible with NVIDIA GPUDirect technology, which enables the transfer of images directly to GPU memory. For one Emergent customer leveraging GPUDirect technology, this meant deploying a system based on Bolt HB-12000-SB 25GigE cameras, one server and CPU, two dual-port 100G network interface cards (NICs), one 24-port switch, and two NVIDIA GPUs.

In this system, the customer deployed 24 HB-12000-SB cameras, which feature the 12.4MP Sony Pregius S IMX535 sensor, capturing images at 60fps and sending the data directly to the GPUs, resulting in zero-data-loss imaging with zero CPU utilization and zero memory bandwidth. With H.265 compression, the images can also be stored locally on disk as well as streamed to real-time messaging protocol (RTMP) clients such as YouTube. Leveraging innovative processing technologies such as GPUDirect allows end users to acquire, transfer, and process large amounts of data quickly and efficiently without worrying about the loss of frames.
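A quick back-of-the-envelope calculation shows the scale of data such a system moves. The sketch below uses the camera count, resolution, and frame rate from the description above; the 8 bits per pixel is an assumption, and protocol overhead is ignored, so treat the result as a rough estimate.

```python
def stream_bits_per_second(pixels, frames_per_second, bits_per_pixel=8):
    """Raw image payload of one camera stream in bits per second
    (assumes uncompressed pixels and ignores protocol overhead)."""
    return pixels * frames_per_second * bits_per_pixel


per_camera = stream_bits_per_second(12_400_000, 60)  # 12.4MP at 60fps
total = 24 * per_camera                              # 24 cameras
nic_capacity = 2 * 2 * 100_000_000_000               # two dual-port 100G NICs

print(f"{per_camera / 1e9:.3f} Gbps per camera")  # 5.952 Gbps per camera
print(f"{total / 1e9:.3f} Gbps total")            # 142.848 Gbps total
print(total < nic_capacity)                        # True
```

Under these assumptions each camera stays well within a 25GigE link, and the aggregate of roughly 143Gbps fits inside the 400Gbps nominal capacity of the four 100G ports.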

EMERGENT GIGE VISION CAMERAS FOR AI AND DEEP LEARNING APPLICATIONS

| Model | Chroma | Resolution | Frame Rate | Interface | Sensor Name | Pixel Size |
|---|---|---|---|---|---|---|
| HR-1800-S-M | Mono | 1.76MP | 660fps | 10GigE SFP+ | Sony IMX425 | 9×9µm |
| HR-1800-S-C | Color | 1.76MP | 660fps | 10GigE SFP+ | Sony IMX425 | 9×9µm |
| HR-5000-S-M | Mono | 5MP | 163fps | 10GigE SFP+ | Sony IMX250LLR | 3.45×3.45µm |
| HR-5000-S-C | Color | 5MP | 163fps | 10GigE SFP+ | Sony IMX250LQR | 3.45×3.45µm |
| HR-12000-S-M | Mono | 12MP | 80fps | 10GigE SFP+ | Sony IMX253LLR | 3.45×3.45µm |
| HR-12000-S-C | Color | 12MP | 80fps | 10GigE SFP+ | Sony IMX253LQR | 3.45×3.45µm |
| HR-25000-SB-M | Mono | 24.47MP | 51fps | 10GigE SFP+ | Sony IMX530 | 2.74×2.74µm |
| HR-25000-SB-C | Color | 24.47MP | 51fps | 10GigE SFP+ | Sony IMX530 | 2.74×2.74µm |

For additional camera options, check out our interactive system designer tool.